Note: This was originally a series of obnoxiously long comments on this post at BoxscoreGeeks.com. If somehow you’ve arrived here without having previously visited that site, you should go there, it’s really good!

There’s no real back and forth, so I’m preserving the posts as originally written without extra editing, with the exception of converting the dynamic posting time indicators to something that makes sense on a static blog. The times are approximate, and refer to the date this was published, 2015 June 4.

At Noon Shawn_Furyan wrote

OK, so you got me. I’ve spent all morning looking at this research. I eventually ended up reading all of Miller and Sanjurjo 2014 after skimming the study cited in your quote: Miller and Sanjurjo 2015.

I did a heavy skim of the later study, and realized that its conclusions were essentially completely dependent on the statistical methodology developed in the earlier study. After reading the earlier study and rereading the abstract and introduction to the later study, my preliminary conclusion is that this research really only applies to repeated shots from a particular location. The first study was a controlled study that looked at shots from a specific point designed on an individual shooter level to be made at a rate of 50%. They did multiple 300 shot sessions with several players on a single Euro team.

They conjectured that the results applied to game situations based on significantly less rigorous evidence. Unless the 2015 study controls for the potential stationary shooter effect in a way not foreshadowed by its introduction, then really all of the new evidence will lack controls for the stationary shooter effect, and so really can’t be inferred for game situations.

In the 2014 study, they do a good job discussing this issue in a kind of buried section, but IMO, their conclusion and abstract don’t discount the claims appropriately (meaning they didn’t find evidence that Kobe can just have a stupid hot streak in a game, when I think that it’s pretty clear that this is the takeaway many people, particularly reporters, are likely to pull from reading those portions of the study).

One situation where the effect could potentially apply is that thing where Curry will try to do a quick pullup from the spot he just hit from on the last possession. Based on the evidence I’ve seen here, I’d recommend trying to keep him from doing that if you’re defending him. This is a conjecture, but it’s closer to the ‘demonstrated’ ‘hot hand effect'[1] than the conjecture by the authors that the effect is general for particular shooters.

That’s the other thing. A primary aspect of the proposed effect is that the authors assume that it’s not really a wide-spread phenomenon. Rather they think that they have identified that particular players are able to get significantly hot, but that most players don’t or that the effect is very subdued in most players.

[1] I would note that the 2015 study will be simultaneously discredited if the 2014 study gets discredited, and that the 2014 study has not been replicated as far as I can tell.


At Noon Shawn_Furyan wrote

I’ll note that I’m not saying anything about the statistical methodology. They don’t look particularly convoluted, and shouldn’t take a huge amount of statistical expertise to verify most of the methods, but that would have taken a lot more time and I’ve already devoted a lot of time just examining the claims.

Shawn_Furyan wrote 5 hours ago

That is to say that my first comment should be taken as an interpretation of what we will have evidence for should the statistical methods of the 2014 study stand up to significant scrutiny. I think that this already diverges significantly from what people are likely taking away from the reporting on the studies in question.

At 3PM Shawn_Furyan wrote

I’m in the middle of the second study now. I REALLY don’t like one of their citations to the previous study. I would say that they are mischaracterizing the evidence, which I actually read earlier today:

“Miller and Sanjurjo (2014) [edit: to be clear, this is a self reference, so it’s not plausible that they just misunderstand the findings they are citing] find, in a detailed questionnaire administered to the expert players who participated in their controlled shooting experiment (and did not observe each others’ shooting sessions), that not only do all of the eight players believe that many of their teammates generally shoot better when on a streak of hits, but only one player believed that all of them do. Further, both their ratings (-3 to +3), and rankings, of how well their teammates shoot when on a hit streak are highly correlated with the actual shooting performance of the teammates in Miller and Sanjurjo’s experiment. When this evidence is taken together with the fact that expert coaches and players have much more information to go by than simply whether a teammate has made or missed the last several shots (e.g. how cleanly shots are entering or missing, the player’s shooting mechanics, body language, etc.), what is suggested is that not only can experts identify which shooters have a greater tendency to get the hot hand, but that they may also sometimes be able to tell the difference between a lucky streak of hits and one that is instead due to an elevated state of performance.”

OK, so 8 dudes collectively, but with significant disagreement, identified the ONE SINGLE GUY on their team who’s probably the main shooting threat as most likely to get a hot hand. One of the 8 players gave everyone the same ranking. In the original article, this is presented as a corroborating sort of sanity check (though they don’t discuss alternative hypotheses like the one I just presented, that the agreement is mainly driven by identifying who the shooter on the team is), and they kind of spin that into a conjecture that it’s plausible that this means that the study authors have identified an effect that exists in in-game scenarios. I didn’t take much exception to that, but citing it in another paper as if it’s some sort of significant finding, and stripping out the context, is laundering the result of its lack of rigor. They even take pains to couch the citation in authoritative language: ‘a detailed questionnaire administered to the expert players’, ‘controlled shooting experiment’, ‘highly correlated with’ (remember, n = 8 with one of those possibly not being taken seriously), as well as language that downplays the lack of substance: ‘only one player believed that all of them [benefit from the hot hand]’ [or alternatively, one of them just picked ‘3’ across the board because questionnaires are annoying], spelling out ‘eight’ (as opposed to ‘n=8’) which is kind of easy to miss when reading because of the density, but which is also completely unscannable, and then finally DOUBLING the length of the section by spouting a bunch of completely unsubstantiated theorizations in order to bolster the perceived effect!

I take huge exception to that.

At 4PM Shawn_Furyan wrote

I guess I was closer to the end than I thought, and the appendices weren’t significant. Yeah… I don’t really see the 2015 study as really adding anything of note.

They seemed to recognize in the 2014 paper that having to shoot against a defense and from different locations is a confounding variable, but then they kind of hand wave that away. I would have expected a followup to confront that issue directly, but instead, they produced essentially more of the same, and then doubled down on and ballasted the hand waving.

Also, they haven’t really dealt with a warm up effect directly, they just observed that in some of their trials it took about 2 shots for everyone to hit their average shooting efficiency. But in games, shots are much scarcer, and there’s a lot more chaos, so I don’t think that the 2 shot warmup period is directly transferable to game situations.

They also acknowledge, but don’t really take on, the issue that players shoot less efficiently in the beginning of the season.

These are two major effects that could explain any findings of clusters of makes beyond what one would expect if a player’s shooting efficiency was constant over time. In light of those unanswered issues their attempts to transfer the effect to game situations are very weak, and honestly the papers would have been better if they’d put a lot less emphasis on trying to stretch their research to cover that case. The research just doesn’t address the situation in any meaningful way.
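To make the warm up concern concrete, here is a rough sketch (mine, not from either paper) of how a slow start alone can make shots look streaky. It assumes every shot is independent and that the only thing that changes is the chance of a make, which is lower on the first two shots. The specific numbers (30% for the warm up shots, 50% after, 10 shots per session) and the function name are made up for illustration. The calculation pools expected counts over many identical sessions, so it is a long-run proportion rather than the per-sequence statistic the authors use.

```python
def pooled_hit_rates(probs):
    """Return (make rate after a make, make rate after a miss).

    probs[t] is the assumed chance of making shot t. Shots are treated as
    independent, so there is no real hot hand anywhere in this model.
    Rates are expected counts pooled over many identical sessions.
    """
    after_hit_makes = after_hit_tries = 0.0
    after_miss_makes = after_miss_tries = 0.0
    for prev, cur in zip(probs, probs[1:]):
        after_hit_tries += prev                # chance the previous shot went in
        after_hit_makes += prev * cur          # ...and this one goes in too
        after_miss_tries += 1 - prev           # chance the previous shot missed
        after_miss_makes += (1 - prev) * cur   # ...and this one goes in
    return after_hit_makes / after_hit_tries, after_miss_makes / after_miss_tries


# Hypothetical sessions: two cold warm up shots then eight at 50%,
# versus a flat 50% on all ten shots.
warmup = [0.3, 0.3] + [0.5] * 8
constant = [0.5] * 10

for label, probs in (("warm up", warmup), ("constant", constant)):
    hit, miss = pooled_hit_rates(probs)
    print(f"{label}: after make {hit:.3f}, after miss {miss:.3f}")
```

With these made-up numbers the warm up shooter makes about 48.5% after a make versus about 47.1% after a miss, while the constant shooter is at 50% either way. That is a small gap that looks like a hot hand but comes entirely from the slow start, which is the kind of thing I would want controlled for before stretching the results to games.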

At 5PM Shawn_Furyan wrote

Another thing that I haven’t looked into at all, but which gives me pause, is that in both papers, the authors essentially slam everybody else who’s studied the effect, including explicitly calling out Amos Tversky (who’s obviously no whippersnapper), and tout their pretty simple metrics as the solution to the errors in all of the previous research. Their (in English) claim is essentially that past methods weren’t sensitive enough to clustering, and that the methods developed in their 2014 study are tuned just right to catch the signal [presumably while filtering out the noise adequately]. The number of observations in the two studies looks on its face to be pretty high, but overall they include only 41 players, and in neither study can the case be made that the player samples are particularly representative of general populations of basketball players within particular leagues, et cetera. The first study has an alarmingly small population of players (n=8), and the second has selection criteria so stringent that it only finds 33 candidates that have met the criteria since the mid 80s (and of course we’re talking about the 3 point competition, so we’re already selecting for the most effective shooters). I don’t recall any indication of how many 3 point shooters were selected against, and I’m not sure that it even makes sense to do a second selection if you’re already selecting specifically for the best shooters. Also, in the second study, the median number of observations was like 143, but the biggest outlier, Craig Hodges, was among the hot handiest, and his 454 observations put him 4 standard deviations above the median. The researchers report only the average in the study, and use the average to come up with their conclusions, but I do wonder if that one player is significantly positively skewing the distribution.

I also wonder if the study wouldn’t be significantly improved by not filtering out shooters that are already supposedly very effective, but rather looking at all of the shots so that the potential ‘hot hand’ shots are presented in terms of all shots rather than isolating them out on some pretty darn synthetic criteria. I’m not 100% positive that this would be an improvement, but the authors don’t attempt to justify their decision to filter out players from an already highly specific non-representative sample.

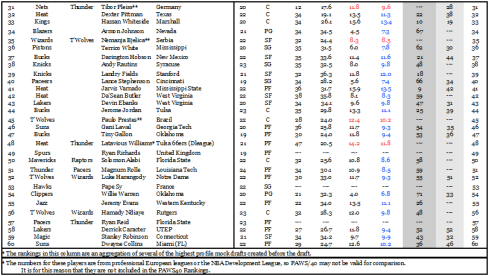

One last thing… the authors take pains in both studies to insist that their findings are the ones that are consistent with conventional wisdom (which I guess is assumed to be consistent as well… they don’t really address that…). But the findings of the second study really don’t strike me as consistent with conventional wisdom once you get past the headline. So… the ordering of their hot handedness metric doesn’t seem to be in any way predictable ahead of time. Reggie Miller is apparently inverse hot handed, Peja is the most inverse hot handed… OK, Gilbert Arenas seems to make sense as being among the inverse hot hand group, but Dirk Nowitzki has less hot handiness than Glen Rice? I’m cherry picking a little, and I’m sure you could make a case for yourself after seeing it, but look on page 13[2]. I mean, is your head nodding vigorously?

[2] Higher on the list means hotter on the manos:

Link to the paper